By Giannis Fyrogenis, Project Manager at reframe.food

The image of a driverless tractor, tilling a field with digital exactitude, has become a potent symbol of agriculture’s high-tech future. This vision, central to initiatives like the Smart Droplets project, which aims to revolutionize precision spraying, promises a world of optimized yields and dramatically reduced environmental impact. Central to this automated idyll is the ability of these robots to know precisely where they are. For decades, Global Navigation Satellite Systems (GNSS), including GPS, have been the bedrock of this precision, enabling critical tasks such as autonomous guidance and the precise application of inputs, as detailed in foundational agricultural technology documentation [1].

Figure 1. Beneath the dense canopy, failing satellite signals highlight the critical need for resilient navigation in modern agriculture.

But what happens when the view to the heavens is obscured? As agricultural robots venture deeper into the complex tapestries of real-world farms—the dense canopies of apple orchards, the trellised rows of vineyards, or fields bordered by signal-reflecting buildings—their satellite lifeline often frays. Standard GPS, for instance, typically yields accuracy only within several meters (a limitation documented in established performance evaluations [2]), an accuracy level insufficient for many precision tasks. Dense crop canopies significantly obstruct and attenuate GNSS signals, a particular problem for under-canopy navigation detailed in field robotics research [3]. Furthermore, reflections from farm structures or even wet ground create “multipath errors,” confusing receivers about their true location, an issue also documented in analyses of GNSS performance [2].

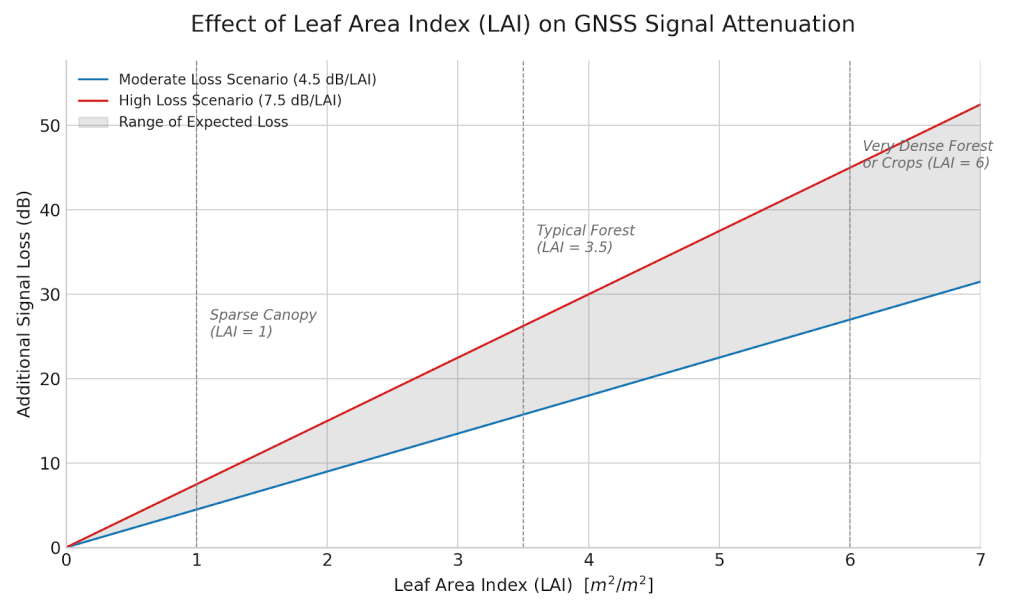

This signal attenuation is not trivial; it is a direct, physical process caused by the absorption and scattering of satellite signals by water in the foliage [10]. The effect can be quantified using a standard ecological metric, the Leaf Area Index (LAI), which measures the layers of leaf area relative to the ground area. As Figure 2 illustrates, there is a strong, direct relationship between canopy density (LAI) and signal loss. A loss of 10-20 decibels (dB), common in moderately dense canopies, represents a 90-99% reduction in signal power, often sufficient to disrupt or completely prevent the centimetre-level RTK lock required for precision tasks. This quantifiable signal degradation is a primary driver behind the need for the GNSS-independent navigation solutions being developed by projects like Smart Droplets.
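The percentages quoted for a 10-20 dB loss follow from the standard relation between attenuation in decibels and the fraction of power that remains, 10^(-dB/10). A minimal Python sketch of that arithmetic is shown here; the function name is ours and purely illustrative.

```python
def power_reduction_percent(loss_db: float) -> float:
    """Percentage of signal power lost for a given attenuation in dB."""
    # Fraction of the original power that still reaches the receiver.
    remaining_fraction = 10 ** (-loss_db / 10)
    return (1 - remaining_fraction) * 100


for loss_db in (10, 20):
    lost = power_reduction_percent(loss_db)
    print(f"{loss_db} dB loss -> {lost:.0f}% of signal power lost")
```

Because the scale is logarithmic, each additional 10 dB removes nine tenths of whatever power was left, which is why a modest-sounding increase in canopy loss can be enough to break an RTK lock.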

These are not minor technical quibbles; they translate into substantial operational headaches. Even advanced RTK-GNSS (Real-Time Kinematic positioning) systems are susceptible; research by Inglis (2006) [4] demonstrated that system latencies can induce positional errors of 20-30 centimeters at typical operational speeds, potentially leading to crop damage or misapplication of expensive inputs like those Smart Droplets aims to optimise. Degraded GNSS accuracy, it’s widely reported, leads to deviations from planned paths, resulting in wasted resources. Such unreliability, alongside infrastructural issues like the lack of consistent 4G/5G cellular coverage in many rural areas (which impacts RTK systems relying on network corrections, as noted in industry analyses [5]), erodes farmer trust and acts as a significant barrier to the wider adoption of autonomous systems. The challenges of achieving reliable positioning in areas with limited GPS coverage are explicitly recognised as a major hurdle for agricultural autonomy, according to detailed academic work [6].
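To give a feel for how latency turns into positional error, a rough first-order estimate is the distance the vehicle travels during the delay, that is, speed multiplied by latency. The sketch below uses hypothetical speed and latency values chosen only for illustration. They are not taken from [4], and a real system has other error sources as well.

```python
def latency_error_cm(speed_m_per_s: float, latency_s: float) -> float:
    """Along-track distance (in cm) covered while the position fix is delayed."""
    return speed_m_per_s * latency_s * 100


# Hypothetical values for illustration only; not measurements from [4].
assumed_speed = 2.0  # m/s, roughly 7.2 km/h
for assumed_latency in (0.10, 0.15):  # seconds
    error = latency_error_cm(assumed_speed, assumed_latency)
    print(f"latency {assumed_latency:.2f} s at {assumed_speed} m/s -> ~{error:.0f} cm")
```

Under these assumed numbers, a delay of only a tenth of a second or so already produces an offset in the tens of centimetres, which is comparable to the spacing between crop rows or spray targets.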

Figure 2. Relationship between canopy density, measured as Leaf Area Index (LAI), and GNSS signal loss.

It is precisely this navigational uncertainty that pioneering efforts like the Smart Droplets project are designed to confront. Focused on delivering a holistic, AI-powered crop spraying solution to reduce chemical use and support farmers in line with EU Green Deal objectives, Smart Droplets inherently relies on its autonomous platform navigating reliably through real-world apple orchards in Spain and wheat fields in Lithuania. For such a system to be truly effective, it cannot be hamstrung by the vagaries of satellite signals. Thus, the quest for robust alternatives is not just an academic exercise but a practical imperative.

This drive for resilient navigation is central to the Smart Droplets project. In its demanding field trials, such as those in Spanish apple orchards, the project is actively integrating and validating advanced sensor fusion techniques. Early indications from these real-world tests suggest that by combining data from sensors like LiDAR and IMUs, the autonomous platforms can achieve significant improvements in path accuracy under heavy foliage compared to relying on RTK-GNSS alone in such conditions. This pursuit of resilience, aiming for consistent centimeter-level precision, is essential for the project to achieve its goal of true field autonomy for its spraying systems. Partners within the consortium, like the Eurecat technology centre, are key to developing and refining these GNSS-independent navigation capabilities, teaching the robots to effectively “see” and interpret their surroundings.

Why is this push for GNSS independence so crucial? The benefits, as explored through analysis of various emerging technologies, are transformative.

Several promising technological avenues are being pursued. Integrating GNSS with IMUs, for example, is a foundational approach, where the IMU bridges short GNSS gaps, though IMU drift remains a challenge (as noted in sensor fusion studies [7]). Vision systems, too, are showing significant potential. Research highlights systems like BonnBot-I [6], which use cameras and visual-servoing to follow crop rows with centimeter-level accuracy. Smart Droplets, in its own development, leverages AI and vision for tasks like real-time threat detection, where accurate navigation is essential for mapping and action.
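The IMU drift mentioned above can be illustrated with a deliberately simplified one-dimensional model: if the accelerometer has a small constant bias and no GNSS fix is available to correct it, integrating twice makes the position error grow roughly with the square of the gap duration. The sketch below is an assumption-laden toy, not the Smart Droplets implementation. The bias value, sample rate, and function name are hypothetical, and sensor noise and orientation errors are ignored.

```python
def dead_reckoning_drift_m(accel_bias: float, gap_s: float, rate_hz: float = 100.0) -> float:
    """Position error (m) accumulated by integrating a constant accelerometer
    bias (m/s^2) over a GNSS outage of gap_s seconds, using Euler steps."""
    dt = 1.0 / rate_hz
    velocity_error = 0.0
    position_error = 0.0
    for _ in range(round(gap_s * rate_hz)):
        velocity_error += accel_bias * dt      # bias integrates into velocity
        position_error += velocity_error * dt  # velocity error integrates into position
    return position_error


# Hypothetical bias, chosen for illustration only.
assumed_bias = 0.05  # m/s^2
for gap in (1, 5, 10):  # seconds without a GNSS fix
    drift = dead_reckoning_drift_m(assumed_bias, gap)
    print(f"{gap:>2} s outage -> about {drift:.2f} m of drift")
```

In this toy model, a one-second outage costs a few centimetres, while a ten-second outage costs metres. That is the reason an IMU alone can bridge short gaps but needs help from cameras, LiDAR, or restored GNSS for longer ones.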

LiDAR, creating precise 3D environmental maps, excels under canopies where GNSS struggles. Field robotics studies [3] show LiDAR combined with IMU dramatically improved under-canopy navigation autonomy. This aligns with the advanced navigation work undertaken by Smart Droplets partners, aiming for robust operation in all farm conditions. The most sophisticated solutions often involve integrating multiple sensors using advanced SLAM algorithms, achieving significant error reduction in GNSS-denied scenarios (as documented in integrated navigation system research [7, 8]).

The increasing role of Artificial Intelligence in fusing these diverse sensor inputs and enabling machines to better understand complex agricultural scenes is a key theme [5, 1, 6, 9]. For Smart Droplets, AI is not just a navigational aid but core to its entire value proposition, from interpreting sensor data for crop health to optimizing the spraying commands delivered by its advanced Direct Injection System.

However, the path to ubiquitously resilient farm robot navigation is still being paved, with challenges like sensor costs, system complexity, and rural connectivity remaining [5]. Initiatives like Smart Droplets, by aiming to advance technologies to higher readiness levels and by incorporating training through its Academy, are part of the broader effort to make these sophisticated systems more practical and accessible.

Strategic recommendations emerging from in-depth analyses call for continued research into robust sensor fusion, standardised benchmarking, and the development of cost-effective, user-friendly systems. The success of focused projects, like Smart Droplets, which are at the forefront of integrating these diverse technologies into cohesive, field-tested solutions, will be instrumental. Their progress in overcoming these fundamental navigational hurdles is not just about smarter robots; it’s about unlocking a more sustainable, efficient, and resilient future for agriculture. As these machines learn to confidently tread their own paths, beyond the exclusive guidance of distant satellites, they will truly begin to reshape how we grow our food.

References

[1] Advanced Navigation: Enhancing Precision Agriculture with ..., accessed June 4, 2025, https://www.unmannedsystemstechnology.com/feature/advanced-navigation-enhancing-precision-agriculture-with-autonomous-systems-ai/

[2] (PDF) Performance Evaluation of GNSS Position Augmentation ..., accessed June 4, 2025, https://www.researchgate.net/publication/365067597_Performance_Evaluation_of_GNSS_Position_Augmentation_Methods_for_Autonomous_Vehicles_in_Urban_Environments

[3] Multi-Sensor Fusion based Robust Row Following for Compact Agricultural Robots - Field Robotics, accessed June 4, 2025, https://fieldrobotics.net/Field_Robotics/Volume_2_files/Vol2_43.pdf

[4] sear.unisq.edu.au, accessed June 4, 2025, https://sear.unisq.edu.au/2242/1/INGLIS_Rientz-2006.pdf

[5] Robots in Agriculture: Transforming the Future of Farming - Fresh ..., accessed June 4, 2025, https://www.freshconsulting.com/insights/blog/robots-in-agriculture-transforming-the-future-of-farming/

[6] bonndoc.ulb.uni-bonn.de, accessed June 4, 2025, https://bonndoc.ulb.uni-bonn.de/xmlui/bitstream/handle/20.500.11811/13077/8261.pdf?sequence=2&isAllowed=y

[7] INS/LIDAR/Stereo SLAM Integration for Precision Navigation in ..., accessed June 4, 2025, https://www.mdpi.com/1424-8220/23/17/7424

[8] Deep Learning-Aided Inertial/Visual/LiDAR Integration for GNSS ..., accessed June 4, 2025, https://www.mdpi.com/1424-8220/23/13/6019

[9] The development of autonomous navigation and obstacle ..., accessed June 4, 2025, https://www.researchgate.net/publication/338283665_The_development_of_autonomous_navigation_and_obstacle_avoidance_for_a_robotic_mower_using_machine_vision_technique

[10] Recommendation ITU-R P.833-10: Attenuation in vegetation, accessed June 5, 2025, https://www.itu.int/rec/R-REC-P.833/en